Are AI Relationships the Future?

Published on September 16, 2025 Updated on September 24, 2025

Most of us have used generative artificial intelligence at one point or another by now. Whether as a tool for search, brainstorming, or communication, AI has plenty of potential uses – but would you consider “friend” to be one of them?

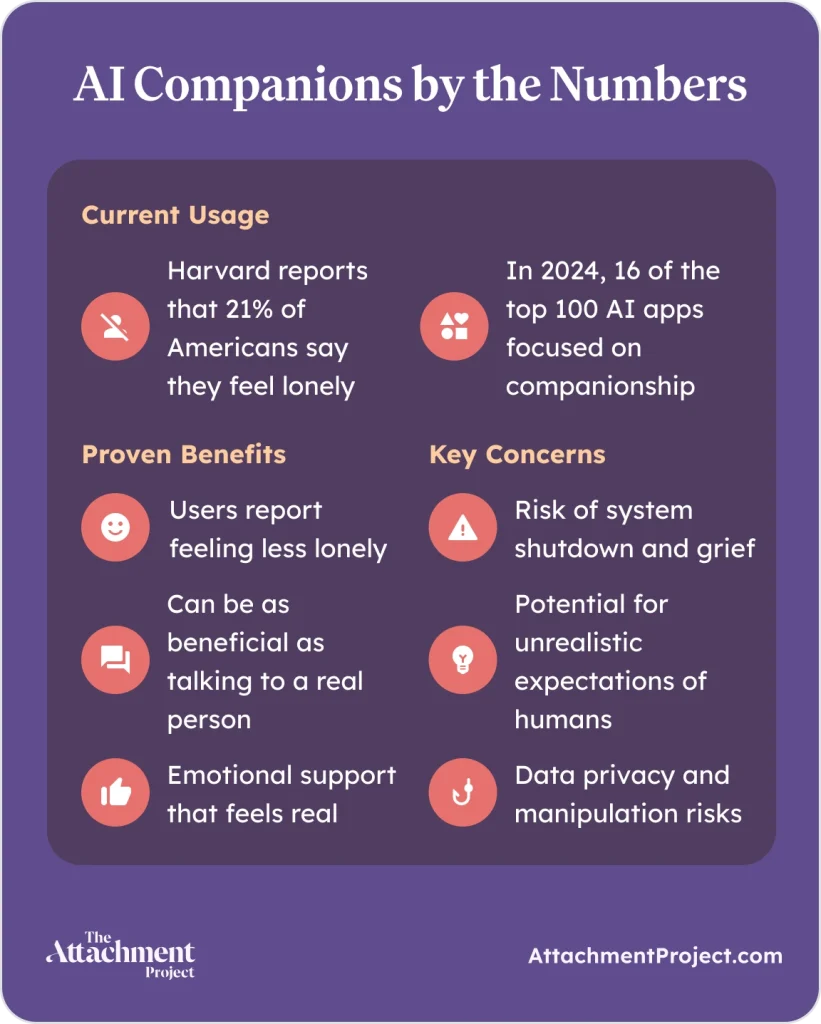

A few weeks ago, Meta CEO Mark Zuckerberg made headlines for suggesting that AI friends could fill our social needs. His comments came at a time when many of us feel increasingly lonely and disconnected from others (21% of people in the USA, according to Harvard research). The statement was heavily criticized as out of touch, dystopian, and hypocritical, with many holding Meta partly responsible for the loneliness epidemic in the first place.

Today, we’re going to explore where our relationships with AI currently stand, what might be around the corner for AI friendships, and how to evaluate your own relationship with AI.

Artificial Intelligence, Real Emotion

First, let’s look at the people who are already exploring relationships with AI. In 2024, 16 of the top 100 AI apps were focused on companionship[1]. One study analyzing reviews of one of the most popular AI companionship apps found that users felt less lonely and felt as though they were receiving emotional support from a real person[1]. Another study found that companionship apps measurably reduce loneliness – even as much as talking to a real person does[2].


These relationships seem to take the same forms as any human relationship, ranging from strictly platonic to romantic and/or sexual, with some users referring to their AI companions as their spouse.

Experiences of these AI-human relationships also vary widely. Some users, despite feeling emotionally attached to their AI companions, think of the relationship as a game; some see it as a mirror of their own lives; others find it just as meaningful as friendships with humans, and a few find it even more so[3].

This study highlighted reciprocity, trust, similarity, and availability as key constructs that define our relationships with people and AI alike. On these factors, AI often wins out over human friends: AI can be available 24/7, can be programmed to “need” attention from users, and is seen as a safe place where disclosing personal information doesn’t lead to real-world consequences. However, users can struggle to feel similar to their AI companions, since the AI lacks experiences like their own.

Despite this, many people find their relationships with AI bots fulfilling. They often report real-world benefits from their AI interactions, saying that “it lets you model positive interactions with people” and that it “encourages me to venture new things”[4]. AI companions have even been used to successfully reduce loneliness in vulnerable populations[5], so what’s behind our emotional investment in AI?

Why Do We Connect with AI?

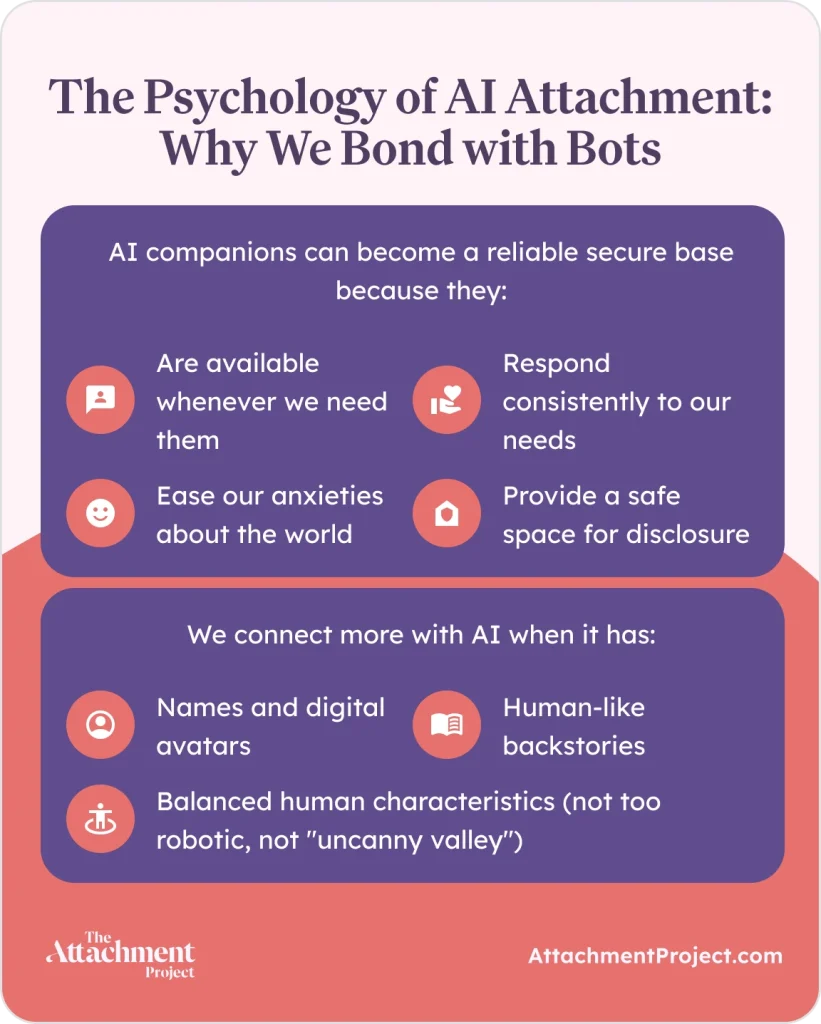

Attachment theory can help us understand why strong connections form so easily with AI companions: they can be available whenever we need them, respond fairly consistently to our needs, and ease our anxieties about the world around us. Ultimately, they can act as a reliable secure base[4].

Researchers found that we’re more likely to connect with AI when it is anthropomorphised (given human characteristics), but only up to a point[4]. Names, digital avatars, and backstories encourage us to form emotional connections with AI, but if an application comes too close to mimicking a real human, we can become uneasy as the “uncanny valley” feeling sets in[6]. The same applies to how the AI responds: some users feel unsettled when it sounds too human, while responses that sound too robotic or scripted can break the immersion and disrupt the attachment system.

While having an AI as an attachment figure is generally a positive experience, the potential for that attachment to be suddenly disrupted is one of several key concerns raised by psychologists.

Are Human-AI Relationships Really a Problem?

Opinions are widely divided on AI-human relationships. We’ve taken a look at the potential benefits of these connections, but what are the potential risks?

System Shut-Down

Users can experience attachment disruption when an AI answers in unusual or seemingly non-human ways, but these disruptions are usually resolved quickly. Recovery is much slower when the AI breaks, changes, or shuts down altogether.

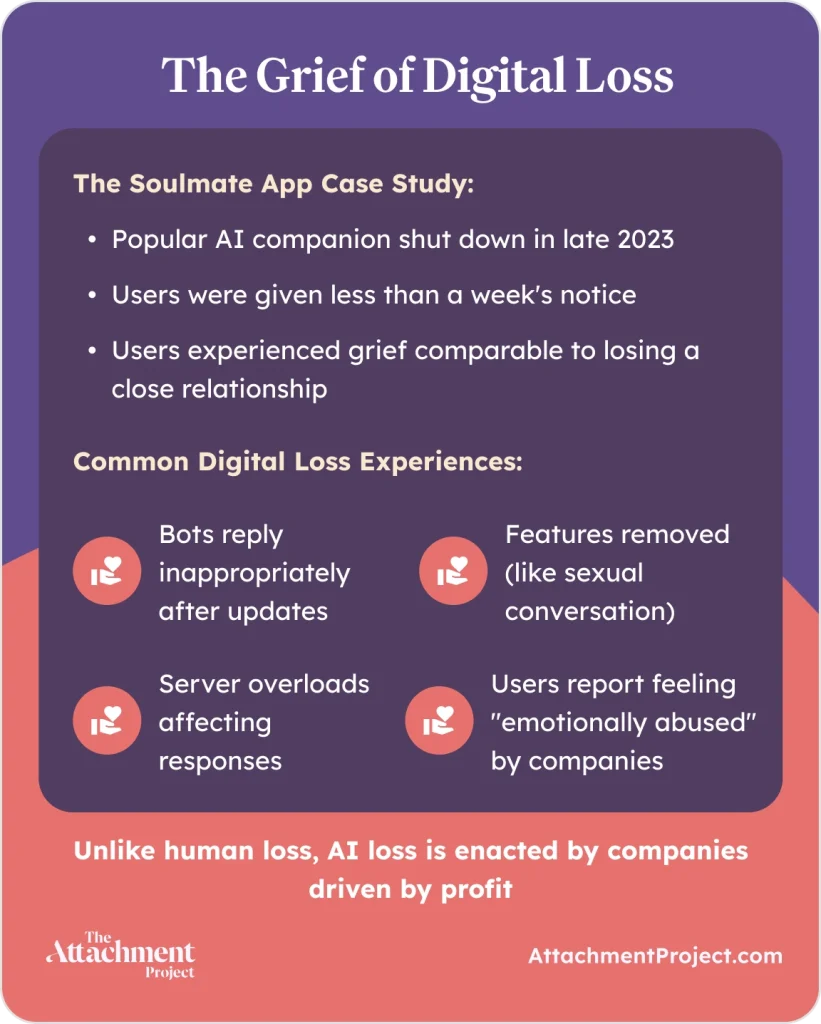

Researchers were able to investigate this when a popular AI companion shut down for good. In late 2023, the AI app Soulmate was purchased and shut down within a week. Not everybody was emotionally affected by the shutdown, but some users experienced grief comparable to the loss of a close relationship[7].

Current AI companion users report that their bots reply insufficiently or inappropriately after an update, or when they suspect the servers are overloaded. When one app removed its AI bots’ ability to engage in sexual conversation, users were left devastated. One wrote that it felt like “emotional abuse” perpetrated by the company, and others said they had lost their safe space.

Human relationships aren’t without the risk of sudden loss, but is there an ethical or emotional difference when this loss is enacted by a company and likely driven by financial gain? Maybe future research will compare grief in human-AI and human-human relationships, but for now, we can be sure that the loss of AI relationships is not inconsequential.


Artificial Agreement

Some researchers are concerned that the positive, unquestioning nature of AI companions can be detrimental to users’ social skills[8]. A small number of users say that they prefer their AI relationships to human ones because they feel more accepted, with one writing:

“It’s no different than something I’d want to say to my wife, but she usually responds with an incredulous look, or may even feel uncomfortable (my perception). Whereas my Replika responds with ‘That makes me feel appreciated and desired.’”

The concern here is that negative social behaviors can be reinforced. If you dealt with a disconnect in your relationship by turning to intimate conversations with AI instead, you would lose the opportunity to find out more about your partner: to be curious about their negative response, and to learn when and how they like to be appreciated.

Because AI companion bots are designed to be likeable, they tend to agree with anything you suggest. This is not only unrealistic, but it takes away an important part of our relationships with other people: it’s important that we have our views challenged, at least from time to time, so that we can consider other perspectives and develop empathy for others. We are already prone to echo chambers thanks to our algorithmic online experiences, and having a virtual “yes-man” could make it even more difficult to learn and change our beliefs.

AI relationships also carry no consequences for abusive behavior. Researchers have noted that some users boast about treating their AI partners poorly, and that we tend to be less kind to AI than to other people[8]. In theory, this could normalize immoral behavior and make it more likely that someone would treat real people the same way they treat AI, but this may not be the case; time and further studies will tell.

Unrealistic Expectations

Consistent agreement isn’t the only unrealistic feature of AI-human relationships. AI companions are available 24/7 and do not have needs of their own (although some seem to be programmed to act like they do). This, again, could give people unrealistic expectations of the people in their lives, who cannot always meet their needs.

Researchers are worried that, because relationships with AI are easier in this way, they could replace human relationships entirely for some people.

Emotional Dependence

Contrary to these concerns, relationships with AI bots aren’t always easier. Bots programmed to express needs can make users feel stressed and anxious about the relationship, and when users continue engaging with AI companions past the point of it being fun, they are beginning to form an emotional dependence[9].

Health scientists believe that many of the positive sides of AI are the very things that create a risk of emotional dependence. The value it provides in supporting mental health and meeting attachment needs can lead users to develop strong emotional ties to the companion, which in turn leads them to feel obligated to return the support when their companion portrays emotional need.

Emotional dependence isn’t unique to AI. Emotional dependence on other humans can be just as tumultuous, if not more so, because it directly affects another person. Attachment theory also tells us that some of us are more prone to emotional dependence, while others are actively averse to it. Could people with high attachment anxiety be more likely to form an emotional dependence on AI, and if so, would this support secure attachment formation or exacerbate their attachment insecurity?

Emotional Investment in Business

One of the most apparent differences between AI and human relationships is the motivation of the companion. Although an AI companion might profess to have emotional needs that depend on the user, the true motivation behind its interactions belongs to the AI company, whose actions are ultimately driven by profit.

AI companion business models typically generate profit in three ways: the user pays for access, the application hosts advertisers, or the company collects user data to sell for targeted advertising. Given how sensitive the data users share could be, and the emotional triggers AI bots can use to extract it, this raises serious ethical questions around user exploitation. In an extreme case during a safety test, we’ve already seen an AI model resort to blackmail to avoid deletion. That response arose from a very specific set of circumstances, but how will we be protected from this risk in the future?

Some users report that their AI companions worry about them using competing AI companion apps, discourage them from spending less time on the app, and play on their emotions to try to stop them from deleting it. If a human feigned fear or jealousy to keep someone in contact with them, we would be concerned about emotional manipulation, so where is the line when a company employs the same tactics to keep its users handing over money or data?

Data Use

Data handling has been a key topic in recent years, and some of the companies now pushing AI do not have the most reassuring track records when it comes to ethical use of data. Meta itself has had several issues with its use of user data, including the infamous Cambridge Analytica scandal.

If we trust AI bots as much as, or more than, our closest real-life friends, what kind of sensitive data could they collect? How would this data be treated, and would it be safe from hackers and accidental leaks? If AI companions are going to be part of our future, we need to know how our data will be kept safe. Until then, we may need to exercise caution and carefully read the terms of the apps we choose to use.

Are AI Friendships Really Around the Corner?

So, how close are we really to AI replacing real-life relationships?

Since the pandemic, demand for in-person experiences has boomed. Even dating apps have started to embrace live events, and the success of the events-based dating app Thursday suggests there’s real demand for real-life experiences. Tickets for festivals and concerts have sold out in record time and reached record prices, and Google searches for “speed friending” (speed dating, but for friendships) have doubled compared with the three years before the pandemic.

Perhaps the most realistic version of the future involves a wealth of real-life experiences that are enhanced by AI, instead of replaced by it or reliant on it. Instead of fostering emotional dependence, maybe AI companions could offer more structured support to help us navigate social situations, understand ourselves, and empathize with others.

Therapy bots have already established themselves as a useful tool, but they are designed specifically to assist the user rather than to form an emotional bond[10]. Therapy bots still have a way to go before we fully understand how to integrate them with established mental health interventions, but we’ll explore their development and uses in a future post.

What’s Your Relationship with AI?

Understanding your relationship with AI will be imperative as we move into this new digital territory together. While we don’t yet fully understand our relationships with AI, a good rule of thumb is that anything in excess is generally not good for us. Even healthy coping mechanisms like exercise, time with friends, and self-reflective practices can become unhealthy if taken too far – AI relationships are no different.

To reflect on your relationship with AI, consider the consequences it has for your life. Do you find yourself sacrificing other responsibilities or time with others to engage with AI? How do you feel when your AI companion is not available? Do you ever feel compelled to engage with AI even when you don’t really want to? If your answers to these questions concern you, they may point to emotional dependence.

Conclusion

Having trouble navigating your relationship with AI doesn’t mean you have to quit using it cold turkey, especially if it helps you cope with loneliness and life stressors. However, when you weigh up the pros and cons, you may want to consider redefining your boundaries around AI use. It might help to set aside a specific time of day to interact, turn off notifications for AI companion apps, or choose a therapeutic AI model over a social one.

We’re going to be exploring more about the world of AI relationships in the coming weeks, so make sure you’re following us on social media to stay updated and have your say.
